Network Latency

From Computing and Software Wiki


Current revision as of 02:31, 13 April 2009

As an engineering term, latency refers to the time between when an action is initiated and when it actually takes effect.

In the context of packet-switching networks, latency can refer to any of the following:

- The time from when a packet is sent to when that packet reaches its destination

- The round-trip time of a packet

- The perceived delay in communication between hosts

In online multiplayer games, the round-trip time of a packet is commonly known as ping.


Causes

Traffic Congestion

Any period during which a packet is prevented from reaching its destination adds to latency. Heavy network traffic can therefore increase latency, because bandwidth limitations and routing issues (for example, packets waiting in router queues behind other traffic) add to the time a message spends in transit.
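The following minimal Python sketch makes the congestion point concrete. It models a single link that forwards packets one at a time, first in, first out. The function `fifo_delays` and its parameters are hypothetical. The model assumes equal-sized packets and ignores propagation and processing delay. It only shows how packets that arrive together must wait behind each other.

```python
def fifo_delays(arrivals_ms, size_bits, link_bps):
    """Return each packet's delay (queueing wait + transmission) in ms.

    arrivals_ms: arrival times at the link, in ascending order.
    size_bits:   size of every packet (assumed identical for simplicity).
    link_bps:    link capacity in bits per second.
    """
    tx_ms = size_bits * 1000.0 / link_bps  # time to put one packet on the wire
    link_free_at = 0.0                     # when the link finishes its current packet
    delays = []
    for t in arrivals_ms:
        start = max(t, link_free_at)       # wait while an earlier packet is sending
        link_free_at = start + tx_ms
        delays.append(link_free_at - t)    # own wait plus own transmission time
    return delays
```

Consider 12,000-bit (1,500-byte) packets on a 1 Mbit/s link. Packets arriving 20 ms apart each see 12 ms of delay. Three packets arriving at the same instant see 12, 24 and 36 ms, even though the link itself is no slower.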

Application performance

Since every packet is ultimately created and read by an application, any time spent processing the information needed to build or read a packet adds to latency. Dropped packets (from events such as packet collisions) also create the perception of latency when the lost data has to be sent again, because the user only sees the total time from when the request was sent to when the message was successfully received.
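The next sketch estimates the cost of a dropped packet for a simple sender that waits a fixed timeout before resending. This Python model is illustrative, not the behaviour of any particular protocol. The functions `perceived_latency_ms` and `expected_latency_ms` are hypothetical, as are the fixed timeout and the assumption that losses are independent.

```python
def perceived_latency_ms(rtt_ms, timeout_ms, attempts):
    """Delay seen by the user when only the last of `attempts` tries succeeds.

    Assumes a sender that waits timeout_ms for a reply before resending,
    and a successful attempt that takes one round trip.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    return (attempts - 1) * timeout_ms + rtt_ms


def expected_latency_ms(rtt_ms, timeout_ms, loss_prob):
    """Average delay if each attempt is lost independently with loss_prob."""
    if not 0 <= loss_prob < 1:
        raise ValueError("loss_prob must be in [0, 1)")
    # Failed attempts before the first success follow a geometric
    # distribution with mean loss_prob / (1 - loss_prob).
    return rtt_ms + timeout_ms * loss_prob / (1 - loss_prob)
```

Take a 50 ms round trip and a 200 ms timeout. A single lost attempt raises the delay the user sees from 50 ms to 250 ms. Under this model, a 50% loss rate gives the same 250 ms on average.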

Distance

Communication is fundamentally limited by the speed of light, which relativity identifies as the maximum speed at which information can travel. The round-trip time of packets is therefore unavoidably tied to the distance over which they are sent. This is a particular issue in space exploration, where round-trip communication times are commonly measured in minutes or hours. Because of this, rovers must be programmed with some level of artificial intelligence so that they can make moment-to-moment decisions autonomously.

Because their signals travel very long distances, satellite internet connections have high latency regardless of bandwidth.
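The distance limit is easy to quantify. Dividing path length by the speed of light gives a lower bound on delay that no amount of bandwidth can remove. The Python sketch below rests on several assumptions:

- Signals travel at the vacuum speed of light (real links are somewhat slower).
- The satellite is geostationary, with both ground stations directly beneath it.
- The closest and farthest Earth-to-Mars distances are approximately 54.6 and 401 million km.

The constant and function names are illustrative.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # in a vacuum; signals in cables are slower
GEO_ALTITUDE_KM = 35_786           # geostationary orbit, above the equator


def min_round_trip_s(one_way_path_km):
    """Lower bound on round-trip time for a path of the given one-way length."""
    return 2 * one_way_path_km / SPEED_OF_LIGHT_KM_S


# Satellite internet: a request goes up to the satellite and back down to a
# ground station, so one way is twice the altitude (best case, both ends
# directly beneath the satellite); the reply makes the same trip in reverse.
geo_rtt_ms = min_round_trip_s(2 * GEO_ALTITUDE_KM) * 1000

# Earth to Mars at approximate closest and farthest separations.
mars_rtt_minutes = [min_round_trip_s(d_km) / 60 for d_km in (54.6e6, 401e6)]

print(f"Geostationary satellite: at least {geo_rtt_ms:.0f} ms")
print(f"Earth to Mars: {mars_rtt_minutes[0]:.0f} to {mars_rtt_minutes[1]:.0f} minutes")
```

These figures give a floor of about 477 ms for a round trip through a geostationary satellite. A round trip between Earth and Mars takes roughly 6 to 45 minutes.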

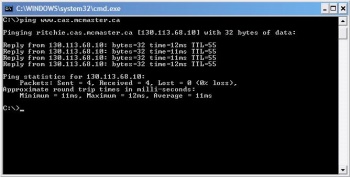

Measuring Latency

Network conditions fluctuate constantly. Measuring the latency between the same two endpoints several times can therefore give different results, and the latency of any single packet may not be a meaningful measurement. A further issue is that any latency measurements the hosts exchange with each other are themselves subject to network delay.

A simple solution to both problems is to calculate latency as the average round-trip time. Round-trip times can be measured entirely from a single host, and averaging over several packets gives a reasonable estimate of the delay future packets will experience. Some care is still needed, because dropped packets and temporary disconnections can skew the average sharply upward. Also, the time a host takes to process packets is typically not included in a round-trip time, yet users and applications waiting for data experience that delay just the same.
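Below is a minimal Python sketch of the averaging step. It assumes each probe yields either a round-trip time in milliseconds or `None` when no reply came back. The helper `summarize_rtts` is hypothetical. Lost probes are left out of the average and reported separately as a loss rate. The median is a swapped-in addition rather than something the text prescribes; it is included because one extreme sample cannot drag it upward.

```python
import statistics


def summarize_rtts(samples_ms):
    """Summarise ping-style probes; None marks a probe that got no reply."""
    replies = [s for s in samples_ms if s is not None]
    lost = len(samples_ms) - len(replies)
    if not replies:
        # Nothing came back, so there is no latency to average.
        return {"loss": 1.0, "mean_ms": None, "median_ms": None}
    return {
        "loss": lost / len(samples_ms),            # fraction of probes lost
        "mean_ms": statistics.mean(replies),       # average round-trip time
        "median_ms": statistics.median(replies),   # robust to a single spike
    }
```

For samples `[20, 22, None, 21, 250]`, the loss rate is 0.2, the mean is 78.25 ms and the median is 21.5 ms. A single spike inflates the mean far more than the median.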

The Ping utility offers a simple way to measure your latency to a given host address.
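Ping itself sends ICMP echo requests, which usually requires raw sockets and elevated privileges. The Python sketch below therefore times TCP connection handshakes instead, a common substitute that costs roughly one round trip per attempt. The function `tcp_ping` is hypothetical, and it assumes the target accepts TCP connections on `port`. Passing a hostname instead of an IP address would add DNS lookup time to the measurements.

```python
import socket
import time


def tcp_ping(host, port, count=4, timeout=2.0):
    """Time `count` TCP handshakes to (host, port), in milliseconds.

    A failed attempt (refused, unreachable or timed out) is recorded as None.
    """
    samples = []
    for _ in range(count):
        start = time.perf_counter()
        try:
            # create_connection returns once the TCP handshake completes,
            # which takes roughly one round trip to the remote host.
            with socket.create_connection((host, port), timeout=timeout):
                pass
        except OSError:
            samples.append(None)
            continue
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples
```

The result is a list of samples in milliseconds, with `None` marking failed attempts. It can then be averaged as described above, treating failures as lost packets rather than folding them into the average.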

Issues

Streaming media

Latency is not in itself a problem for streaming video or audio: ideally, it only delays playback by a fixed amount roughly equal to the connection's average latency. Sudden latency spikes can interrupt the stream, although buffering is effective at preventing this up to a point. Latency does become a problem, however, when communication or interactivity is involved. Live television broadcasts have noticeable latency due to the nature of their transmission, which becomes apparent during satellite interviews and the like. The same delay is a common problem with VoIP services such as Skype.
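A playout buffer can be modelled in a few lines of Python. The sketch below is a simplification built on these assumptions:

- Playback starts `buffer_ms` after the first packet arrives.
- Each later packet is due at its original send spacing.
- Any packet arriving after its due time counts as a glitch.

`late_packets` is a hypothetical helper.

```python
def late_packets(send_ms, arrive_ms, buffer_ms):
    """Count packets that arrive too late to be played on schedule.

    Playback begins buffer_ms after the first packet arrives; packet i is
    due (send_ms[i] - send_ms[0]) ms after packet 0 is played.
    """
    playback_start = arrive_ms[0] + buffer_ms
    return sum(
        1
        for sent, arrived in zip(send_ms, arrive_ms)
        if arrived > playback_start + (sent - send_ms[0])  # missed its slot
    )
```

Suppose packets are sent 20 ms apart and one of them hits a latency spike, so they arrive at 50, 72, 91, 240 and 133 ms. With no buffer, 4 packets are late. A 100 ms buffer leaves only the delayed packet late. A 200 ms buffer absorbs the spike entirely, at the cost of 200 ms of extra delay. That trade is acceptable for one-way streaming but not for interactive use.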

Real-Time Gaming

Real-time online multiplayer games suffer from network latency, which causes a delay between the players' input and the game's response. Modern online games are typically designed to use a client-server networking model, though peer-to-peer implementations are also possible. Because of latency, the players' instructions do not reach the server instantaneously, and the server's description of the current gamestate is slightly outdated by the time it reaches the players. During the infancy of online gaming, the only workaround was for players to compensate manually by performing actions earlier than they actually wanted to.

Modern game engines such as Valve Software's Source Engine implement a number of lag compensation techniques[1]. To eliminate the perceived delay on a client, character animations and other responses that do not affect the gamestate are played immediately. Actions that do affect the gamestate (such as a player walking forward) have their outcomes predicted on the client as if they had already happened, so the player immediately sees themselves walking forward. The client therefore sees a real-time approximation of the server's current gamestate at any given moment. If a discrepancy is found once the server responds, the client adjusts itself to match the server's new state in order to avoid desynchronization. This can, however, cause a sudden "jerking" effect known as "rubber-banding". This method is known as client-side prediction.
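Client-side prediction can be sketched in Python for a player moving along one axis. `PredictingClient` and its methods are hypothetical names. The client applies each input immediately and numbers it. When the authoritative server state arrives, together with the number of the last input the server processed, the client adopts that state. Replaying the inputs the server has not yet processed is a common refinement that is assumed here rather than described in the text. Without it, every correction would briefly discard moves that are still in flight.

```python
from dataclasses import dataclass, field


@dataclass
class PredictingClient:
    """Hypothetical client that predicts its own movement along one axis."""
    position: float = 0.0
    next_seq: int = 0
    pending: list = field(default_factory=list)  # (seq, move) not yet confirmed

    def apply_input(self, move):
        """Apply a move immediately and queue it for the server."""
        seq = self.next_seq
        self.next_seq += 1
        self.position += move                # predicted outcome, shown at once
        self.pending.append((seq, move))
        return seq, move                     # what would be sent to the server

    def on_server_state(self, server_position, last_processed_seq):
        """Adopt the server's state, then replay inputs it has not seen yet."""
        self.pending = [(s, m) for s, m in self.pending if s > last_processed_seq]
        self.position = server_position      # the server is authoritative
        for _, move in self.pending:
            self.position += move
```

Here is how that plays out:

- After three moves of `+1.0`, the client shows position 3.0.
- If the server reports 2.0 after processing inputs 0 and 1, replaying input 2 leaves the client at 3.0 with no visible correction.
- If the server instead reports 1.5 (say, because a wall blocked the player), the client jumps to 2.5, which the player sees as rubber-banding.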

To mitigate the discrepancies between the server and clients, latency can be factored into critical gamestate calculations. For example, suppose the server wants to check whether player A shoots player B. Client-side predictions are never perfectly accurate, so a client cannot know the server's exact gamestate. A naive collision check against player B's current position on the server would therefore make it impossible to hit player B consistently. Instead, the server looks back in time (according to player A's round-trip latency) and checks whether player A's shot would have hit in that earlier state. Though some mathematical imprecision may result, this aligns the events of the game much more closely with what each player actually sees.
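The server-side rewind can be sketched in Python as a history of timestamped positions that the server queries at an earlier time. `PositionHistory` and `lag_compensated_hit` are hypothetical. Positions are 2D points, the hitbox is a circle of the given radius, and the rewind amount is simply passed in. Following the text, the rewind would be derived from player A's latency, though real engines may add further terms such as the client's interpolation delay.

```python
import bisect
import math


class PositionHistory:
    """Hypothetical server-side record of one player's recent positions."""

    def __init__(self):
        self.times = []      # server timestamps in seconds, ascending
        self.positions = []  # (x, y) position recorded at each timestamp

    def record(self, t, pos):
        self.times.append(t)
        self.positions.append(pos)

    def at(self, t):
        """Return the position at time t, interpolating between snapshots."""
        i = bisect.bisect_left(self.times, t)
        if i == 0:
            return self.positions[0]        # at or before the oldest snapshot
        if i == len(self.times):
            return self.positions[-1]       # after the newest snapshot
        t0, t1 = self.times[i - 1], self.times[i]
        (x0, y0), (x1, y1) = self.positions[i - 1], self.positions[i]
        a = (t - t0) / (t1 - t0)
        return (x0 + a * (x1 - x0), y0 + a * (y1 - y0))


def lag_compensated_hit(history, now, rewind_s, aim, radius):
    """Test a shot against where the target was rewind_s seconds ago."""
    x, y = history.at(now - rewind_s)
    return math.hypot(x - aim[0], y - aim[1]) <= radius
```

Suppose player B moved from (0, 0) at t = 0 s to (10, 0) at t = 1 s. Player A, with 0.2 s of latency, fires at (8, 0) at t = 1 s. The rewound position is (8, 0), so the shot hits. Checking against B's current position (10, 0) instead would register a miss. In practice the history would also be trimmed to the last second or so.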

Potential Uses

Although reducing network latency is nearly always desirable, applications can also take advantage of its existence to provide services that would not otherwise be possible. An article in the journal Computer Networks describes a lag compensation method for online multiplayer games that uses network latency to simulate a form of time dilation.[2] Using what are called local perception filters, a fully synchronized game can appear to run in slow motion for short periods to some players but not to others. Implemented naively, such a disparity in game speeds would cause the players to desynchronize.

References

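- [1] Source Engine Multiplayer Networking

- [2] Realizing the bullet time effect in multiplayer games with local perception filters

- About.com, Network Bandwidth and Latency

- Valve development wiki page on lag compensation

See Also

- Packet-switching

- Multiplayer Games

- Ping

- Computer Networking

- Bandwidth

External Links

- Wide Awake Developers, Architecting for Latency

- wikiHow, Instructions for testing latency in Microsoft Windows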