Different measures for evaluation

From Computing and Software Wiki


Current revision as of 06:11, 24 November 2009


Measures of Evaluating Human-Computer Interfaces

Utility

The fundamental goal of HCI is "to develop or improve the safety, utility, effectiveness, efficiency, and usability of systems that include computers" (Interacting with Computers, 1989, p. 3). Utility means that the product can achieve a given goal or perform a given task; in other words, utility is the functionality of the system.

For any interface, the basic requirement is to achieve its intended goal correctly, as requested. Thus, when the utility of an interface is evaluated, three questions are of primary concern:

1) What is the purpose of the system and of the items in the interface?

2) How well does the main system perform its intended task?

3) How well do the accessory functions perform?

For the first question, the manufacturer and the user focus on both the purpose of the system and the purpose of each designed item. The company and the user may review the goal of the system, and each widget (menu, button, etc.) is evaluated for its purpose and may be removed or added as needed.

For the second question, the performance of the whole system is considered: evaluators judge whether it performs correctly, as requested and as designed. The HCI may be tested by experts in a simulated test environment, or evaluated by real users.

For the third question, the other widgets in the interface must also be considered. Some widgets, such as help or warning messages, may not relate to the system's main task but are still very important, so these functions cannot be ignored in a utility evaluation.

To answer the three questions above, GOMS, expert reviews and user-based testing (especially quantitative measures) are the most common methods for evaluating the utility of an interface. Surveys can also be used to evaluate the functionality of the HCI.
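As a rough illustration of GOMS, its simplest variant, the Keystroke-Level Model (KLM), predicts an expert's error-free execution time by adding up standard times for elementary operators. The sketch below assumes the commonly cited KLM operator times and a hypothetical comparison between reaching a command through a menu and through a two-key shortcut; the function name `klm_time` and both operator sequences are illustrative, not taken from this page.

```python
# Commonly cited KLM operator times in seconds. These are assumed defaults;
# a real study should calibrate them for its own users and devices.
KLM_TIMES = {
    "K": 0.20,  # keystroke or mouse-button press (average skilled typist)
    "P": 1.10,  # point at a target with the mouse
    "H": 0.40,  # move the hand between keyboard and mouse
    "M": 1.35,  # mental preparation for the next step
}


def klm_time(operators: str) -> float:
    """Predicted expert execution time for a string of KLM operators."""
    return sum(KLM_TIMES[op] for op in operators)


# Hypothetical comparison for one command:
# via the menu: reach for the mouse, think, point, click, point, click;
# via a two-key shortcut with the hands already on the keyboard.
via_menu = "HMPKPK"
via_shortcut = "MKK"
print(f"menu: {klm_time(via_menu):.2f} s, shortcut: {klm_time(via_shortcut):.2f} s")
```

Under these assumed times the menu path comes to about 4.35 s and the shortcut to about 1.75 s. Such predictions only cover skilled, error-free use, which is why user-based testing is still needed alongside them.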

Utility is the essential requirement of an HCI, but evaluation of the other aspects is important as well.

Efficiency

Many technological products, such as nuclear reactors and control systems, rely on an HCI to turn their technical complexity into a usable product. Technology alone may not win user acceptance and subsequent marketability; the user experience, or how the user experiences the end product, is the key to acceptance.

For a given HCI, companies and users are usually most concerned with the following questions:

1) How easy is it for a new user to learn to operate the interface?

2) How much time and effort does it take an operator to finish a given task without any supervision?

3) More importantly, how much does the HCI improve productivity?

If users find the HCI too hard to learn or use, the software will not be accepted, however good it is otherwise. Good user interface design makes a product easy to understand and use, which results in greater user acceptance.

In HCI, efficiency is measured as the resources expended by the user in relation to the accuracy and completeness of the goals achieved. High efficiency is achieved when the user finishes the task with few resources, in terms of time, learning cost, maintenance cost, and so on.
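One way to put this definition into numbers, sketched here under stated assumptions, is to report effectiveness as the share of attempts that fully achieve their goal, and time-based efficiency as goals achieved per second, averaged over all attempts. The `Attempt` record, the two function names and the sample data are hypothetical, and this particular formula is one common choice rather than a method prescribed by this page.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    """One user's attempt at one task (hypothetical record layout)."""
    completed: bool  # was the goal fully and correctly achieved?
    seconds: float   # time spent on the attempt


def effectiveness(attempts: list[Attempt]) -> float:
    """Share of attempts that achieved their goal (0.0 to 1.0)."""
    return sum(a.completed for a in attempts) / len(attempts)


def time_based_efficiency(attempts: list[Attempt]) -> float:
    """Mean goals achieved per second, averaged over all attempts.

    A failed attempt contributes zero goals per second, pulling the mean down.
    """
    return sum((1.0 if a.completed else 0.0) / a.seconds for a in attempts) / len(attempts)


# Hypothetical data: four attempts at the same task, one of them failed.
attempts = [Attempt(True, 20), Attempt(True, 50), Attempt(False, 60), Attempt(True, 25)]
print(f"effectiveness {effectiveness(attempts):.2f}, "
      f"efficiency {time_based_efficiency(attempts):.4f} goals/s")
```

For these numbers the sketch reports an effectiveness of 0.75 and an efficiency of 0.0275 goals per second.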

Several criteria are used to measure the efficiency of a human-computer interface, including the design, usability and performance of the HCI. The Data Envelopment Analysis (DEA) technique can be used to measure HCI efficiency.
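In general, DEA solves a linear program for each unit being evaluated. In the special case of one input and one output under constant returns to scale (the CCR model), that program reduces to dividing each unit's output/input ratio by the best ratio observed, which lets the sketch below avoid an LP solver. Treating each interface variant as a unit, with minutes of user time as the input and completed tasks as the output, is an assumption made for illustration; the variant names and figures are hypothetical.

```python
def dea_single_ratio(units: dict[str, tuple[float, float]]) -> dict[str, float]:
    """CCR DEA efficiency for units with one input and one output.

    units maps a unit name to (input, output). With a single input and a
    single output, the CCR linear program reduces to each unit's
    output/input ratio divided by the best ratio among all units, so the
    best performer scores 1.0 and every other unit scores between 0 and 1.
    """
    ratios = {name: out / inp for name, (inp, out) in units.items()}
    best = max(ratios.values())
    return {name: r / best for name, r in ratios.items()}


# Hypothetical interface variants: (minutes of user time, tasks completed).
variants = {"A": (30.0, 12.0), "B": (20.0, 10.0), "C": (25.0, 8.0)}
for name, score in dea_single_ratio(variants).items():
    print(name, round(score, 2))
```

Here variant B is on the efficient frontier (score 1.0), while A and C score 0.8 and 0.64. With several inputs or outputs, such as time, errors and training hours, the full linear-programming form of DEA would be needed instead of this ratio shortcut.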

The following design practices improve the efficiency of an HCI:

1) Consistent screen design

2) Menus that are not too long and do not contain too many items

3) A Help function that describes the procedure for common issues

4) Animations and images that simulate the real-world situation

5) Error messages that warn the user and offer useful suggestions

6) A design similar to that of other related software

7) Online help and tutorials

Learnability [1]

Introduction

Using a software application to accomplish particular goals or tasks requires some amount of learning, and that amount varies with the user's experience level, be it basic, intermediate/casual, or expert. This learning usually does not happen instantaneously; it normally requires some practice using the application, figuring out how it works and how to make it do what the user wants.

Definition

The basic definition of learnability is the extent to which something can be learned. In the case of software metrics, it is the amount of time and effort required to become proficient at using the application. Learnability is often ignored in product evaluations, yet it is important to know how quickly users reach given levels of proficiency: it tells organizations and users how long it is likely to take them to become proficient with the application once it is deployed or installed.

Role of Short/Long Term Memory in Learnability

One of the main factors in learnability is memory. If the user must use the application daily or hourly, the knowledge of how to use it quickly moves from short-term into long-term memory, with a resulting overall increase in proficiency. However, for a less frequently used application, used perhaps once per month, memory works against the user, who may have to re-learn the application each time because infrequent use has not given that knowledge a chance to become embedded in long-term memory.

Results of Learnability Studies

The key result of a learnability study is how long it takes users to become proficient at accomplishing a particular goal or task with the new application. From this, the required time and effort [and thereby cost] can be estimated. In a learnability study, it is important to examine the initial exposure to the application, where there will likely be some major and minor difficulties. However, it is even more important to record subsequent exposures to determine the rate at which users improve at accomplishing their goals/tasks. The key is to determine when maximum user efficiency is reached.

Collecting and Measuring Learnability Data

Learnability data are collected in the same way as data for other metrics, but the analysis includes only metrics that focus specifically on efficiency, such as time-on-task, errors, number of steps, or task success within a given amount of time. As learning occurs, efficiency is expected to improve.
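The collected data can be as simple as one record per participant per trial. The sketch below assumes time-on-task as the efficiency metric and averages it across participants for each trial, which yields the points of a learning curve; the record layout, participant IDs and times are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (participant, trial number, time on task in seconds).
records = [
    ("P1", 1, 120), ("P1", 2, 90), ("P1", 3, 70),
    ("P2", 1, 140), ("P2", 2, 100), ("P2", 3, 80),
]


def mean_time_per_trial(rows):
    """Average time on task across participants for each trial number."""
    by_trial = defaultdict(list)
    for _participant, trial, seconds in rows:
        by_trial[trial].append(seconds)
    # Sort by trial number so the result reads as a learning curve.
    return {trial: mean(times) for trial, times in sorted(by_trial.items())}


print(mean_time_per_trial(records))
```

The same grouping works for errors or number of steps; only the metric stored in each record changes.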

With regard to duration, there are three sampling methods, which differ in duration depending on the goal of the analysis. They also differ in how memory may or may not affect the accuracy of the data collected.

Trials within the same session: The user performs tasks, or task sets, consecutively and without interruption. This method does not account for memory loss.

Trials within the same session, with breaks: The user performs tasks, or task sets, with 'distractor' tasks placed in between to determine how much proficiency the user retains between tasks/task sets.

Trials between sessions: The user performs tasks, or task sets, over multiple sessions, with one or more days between sessions. This is sometimes considered the least practical method, but it is the most realistic if the application is only used occasionally.

When deciding how many trials are needed, it is better to overestimate, which ensures that a more complete dataset is available for analysis.

Analyzing and Presenting Learnability Data

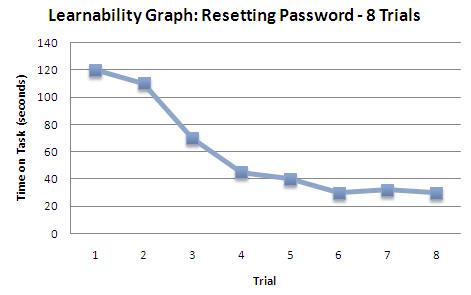

The common way to analyze and present learnability data is to focus on one performance metric [such as number of errors] for a specific task. It is also possible to aggregate the metric across all tasks in order to provide a much broader picture of the overall learnability of the application.

In this example, the metric chosen was time on task. Below is a hypothetical result graph for a time on task study.

The main aspects of the graph to consider are the following:

Line slope: The slope of the line, also known as the learning curve, should ideally be fairly flat and trending downward for a metric where lower is better, such as time on task [indicating both that learning is taking place and that efficiency is increasing].

Asymptote: Where the line 'flattens out'. This indicates how long it takes to reach the maximum level of efficiency [how long it takes a user to go from 'novice' to 'expert'].

Delta-Y: The difference between MAX(Y) and MIN(Y). This value indicates approximately how much learning the user needs to reach maximum performance. A small gap indicates that the application is relatively easy to learn and use; a large gap indicates that the user needs a great deal of time and effort to become proficient.
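The three aspects above can be computed from the per-trial means. The sketch below is one possible approach for a metric where lower is better: it reports the change between consecutive trials (the slope), Delta-Y as MAX(Y) - MIN(Y), and approximates the asymptote as the first trial whose value is within a tolerance of the final trial's value. The function name, the 5% tolerance and the six-trial curve are assumptions chosen for illustration.

```python
def analyze_curve(times: list[float], tolerance: float = 0.05) -> dict:
    """Summarize a learning curve for a metric where lower is better.

    times[i] is the mean value (e.g. time on task) for trial i + 1.
    """
    # Change between consecutive trials; negative values mean improvement.
    slopes = [later - earlier for earlier, later in zip(times, times[1:])]
    delta_y = max(times) - min(times)
    final = times[-1]
    # Approximate the asymptote as the first trial whose value is within
    # `tolerance` (a fraction) of the final trial's value.
    plateau_trial = next(
        i + 1 for i, y in enumerate(times) if y <= final * (1 + tolerance)
    )
    return {"slopes": slopes, "delta_y": delta_y, "plateau_trial": plateau_trial}


# Hypothetical mean time on task (seconds) over six trials.
curve = [130, 95, 75, 66, 64, 63]
print(analyze_curve(curve))
```

For this curve, the steps shrink from -35 s to -1 s, Delta-Y is 67 s, and the curve is judged to flatten out at trial 4. A different tolerance moves that point, so it is worth reporting alongside the result.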

User Satisfaction

Although user satisfaction with a human-computer interface can be seen as a special case of user satisfaction with the whole system, it is the most important part: no matter how good the system is, if users see its interface as discouraging, hostile or even wrong, the system will never be used effectively. The international standard on usability [2] likewise names efficiency, effectiveness and user satisfaction as the three pillars of usability.

User satisfaction evaluation is always based on surveys of end users, carried out as interviews or questionnaires. The group of users surveyed should include every kind of user (in every relevant sense); otherwise the evaluation will not be objective, and later refinements may even make the interface worse. It is also important that the group is motivated and willing to give feedback.

When the survey uses questionnaires, keep in mind that users are unlikely to use exactly the same words to describe concepts or experiences. For example, in an experiment in which secretaries were asked to tell other secretaries which editing functions to perform on a given document [3], different subjects used the same word to describe simple, well-known word-processing operations only 10–20% of the time. Hence, it is better to suggest words to users and ask only for their opinion of them.
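A figure like 10–20% is a probability that two subjects choose the same word. As a rough illustration of how such a figure can be computed from free-text answers, the sketch below counts the fraction of pairs of distinct subjects who used the same word. The answers are hypothetical, and the pairwise measure is an assumption about how agreement is defined, not necessarily the exact procedure used in [3].

```python
from collections import Counter


def same_word_agreement(answers: list[str]) -> float:
    """Fraction of pairs of distinct subjects who used the same word.

    If n_i of N subjects chose word i, the agreeing pairs number
    sum(n_i * (n_i - 1)) / 2 out of N * (N - 1) / 2 pairs in total.
    Requires at least two answers.
    """
    n = len(answers)
    counts = Counter(word.strip().lower() for word in answers)
    agreeing = sum(c * (c - 1) for c in counts.values())
    return agreeing / (n * (n - 1))


# Hypothetical words ten subjects used for the same editing operation.
answers = ["delete", "remove", "erase", "delete", "cut",
           "omit", "delete", "strike", "remove", "delete"]
print(f"{same_word_agreement(answers):.0%}")
```

Even though "delete" is the most popular word here, only 14 of the 90 ordered pairs (about 16%) agree, which illustrates why suggesting words and asking for opinions gives more comparable answers.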

Two types of question are commonly used [4]: questions that assess the user's mindset using scales, and itemized questions, where the user picks the statements they agree with.

Examples of assessing the user's mindset using scales

Example 1: For a given statement, choose the best-fitting option:

- Strongly agree

- Agree

- Neutral

- Disagree

- Strongly disagree

Example 2: I find the interface:

- Hostile 1 2 3 4 5 6 7 Friendly

- Vague 1 2 3 4 5 6 7 Specific

- Misleading 1 2 3 4 5 6 7 Beneficial

- Discouraging 1 2 3 4 5 6 7 Encouraging

Example 3: Choose the better-fitting word:

- Pleasing versus Irritating

- Simple versus Complicated

- Concise versus Redundant
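Responses to scale questions like these are usually coded as numbers before analysis. The sketch below assumes 7-point items like those in Example 2, where 7 is the favourable end, and reports the mean score of each item across respondents. An item whose favourable end is on the left must be reverse-coded first, which the `reverse` parameter handles; the function name, the item labels and the response data are hypothetical.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 7  # the 7-point scale of Example 2


def item_means(responses, reverse=()):
    """Return the mean score of each item across respondents.

    responses maps an item name to the list of scores it received. Items
    listed in `reverse` have their favourable end on the left, so their
    scores are flipped (1 becomes 7, 2 becomes 6, ...) before averaging.
    Afterwards a higher mean is always more favourable.
    """
    result = {}
    for item, scores in responses.items():
        if any(not SCALE_MIN <= s <= SCALE_MAX for s in scores):
            raise ValueError(f"{item}: score outside {SCALE_MIN}-{SCALE_MAX}")
        if item in reverse:
            scores = [SCALE_MIN + SCALE_MAX - s for s in scores]
        result[item] = mean(scores)
    return result


# Hypothetical answers from five respondents. "Pleasing-Irritating" is an
# assumed 7-point item coded with Pleasing = 1, so it must be reversed.
responses = {
    "Hostile-Friendly": [6, 5, 7, 6, 4],
    "Vague-Specific": [3, 4, 2, 5, 3],
    "Pleasing-Irritating": [2, 1, 3, 2, 2],
}
print(item_means(responses, reverse={"Pleasing-Irritating"}))
```

After reversal every mean points the same way, so the low "Vague-Specific" score (3.4) stands out as the item to look at first.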

Examples of itemized questions

Example 1: Choose the statements you agree with:

- I find the system commands easy to use.

- I feel competent with and knowledgeable about the system commands.

- When writing a set of system commands for a new application, I am confident that they will be correct on the first run.

- When I get an error message, I find that it is helpful in identifying the problem.

- I think that there are too many options and special cases.

Example 2: What, in your opinion, needs to be improved?

- Interface details
  - Readability of characters
  - Layout of displays

- Interface actions
  - Shortcuts for frequent users
  - Help menu items

- Task issues
  - Appropriate terminology
  - Reasonable screen sequencing
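Itemized answers reduce to counts: for each statement, the share of respondents who selected it. The sketch below assumes each respondent's answer to the first itemized example is recorded as the set of statement numbers (1 to 5, in the order listed) that they picked; the function name and the data are hypothetical.

```python
from collections import Counter


def selection_rates(selections: list[set[int]], n_statements: int) -> dict[int, float]:
    """Share of respondents who selected each statement (numbered from 1)."""
    counts = Counter(number for chosen in selections for number in chosen)
    # Statements nobody picked still appear, with a rate of 0.0.
    return {k: counts[k] / len(selections) for k in range(1, n_statements + 1)}


# Hypothetical answers from four respondents to the five statements.
selections = [{1, 2, 4}, {1, 5}, {2, 4}, {1, 4, 5}]
for statement, rate in selection_rates(selections, 5).items():
    print(f"Statement {statement}: {rate:.0%}")
```

Note that the statements do not all point the same way: agreeing with "there are too many options and special cases" is a negative signal, so each rate has to be read against the wording of its statement.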

References

[1] Albert, William, and Thomas Tullis. "Performance Metrics." Measuring the User Experience: Collecting, Analyzing, and Presenting Usability Metrics (Interactive Technologies). San Francisco: Morgan Kaufmann, 2008. pp. 92-97. Print.

[2] ISO/DIS 9241-11 (ISO, 1997).

[3] Furnas, G.W., Landauer, T.K., Gomez, L.M. and Dumais, S.T., 1983. Statistical semantics: analysis of the potential performance of keyword information systems. The Bell System Technical Journal 62(6), pp. 1753–1805.

[4] Poehlman, 2009. Lecture Notes, Software Engineering 4D03/6D03/Computer Science 4HC3, Fall 2009. McMaster University, pp. 93-94.

[5] Lindgaard, G., Dudek, C., 2002. What is this evasive beast we call user satisfaction? Elsevier Science.