Web content filtering

From Computing and Software Wiki

This page is a brief overview of the types of HTTP (Web) content filtering and the technologies behind them.

The Internet is large and full of information. Some of this information is very useful. Much of it is not. Some of it can be dangerous to your computer. Some of it may be more dangerous than that.

In response to the danger, many organizations have employed various types of content filtering. Traditionally, such filters have been used in primary and secondary schools and in libraries to limit children’s exposure to profanity. Many employers now use them to enforce their Internet code of conduct.

This page discusses the technology behind HTTP or Web Content Filtering.

Traditional Filtering

The Big Brother Approach

This simple content control scheme logs every page that a user visits. A system administrator can then review the logs, either manually or with an automated tool.

This method is usually used in conjunction with a terms of use agreement. A user who breaches the agreement can be identified from the logs and prosecuted.
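The automatic review mentioned above can be sketched in a few lines of Python. This is only an illustration: the log format (timestamp, user, URL separated by spaces), the `flag_visits` function, and the watch list are assumptions made for the example, not the format of any particular logging product.

```python
from collections import defaultdict

# Hypothetical watch list, chosen only for illustration.
WATCHED_TERMS = ("slime",)

def flag_visits(log_lines, watched_terms=WATCHED_TERMS):
    """Return {user: [urls]} for logged visits whose URL contains a watched term.

    Each log line is assumed to look like: "<timestamp> <user> <url>".
    """
    flagged = defaultdict(list)
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip lines that do not match the assumed format
        _timestamp, user, url = parts
        if any(term in url.lower() for term in watched_terms):
            flagged[user].append(url)
    return dict(flagged)

sample_log = [
    "2008-03-27T12:53:00 alice http://www.example.com/news",
    "2008-03-27T12:54:10 bob http://www.SlimeVolleyBall.net/play",
]
print(flag_visits(sample_log))
```

Note that nothing is blocked here; the log only tells the administrator who visited what after the fact.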

Profanity in URL Approach

The most basic form of content filtering is filtering based on a URL. It is so simple that many home routers support it natively. The filter prevents a user from visiting any URL that contains one of a given set of words or phrases.

As an example, assume a system administrator wants to block an online game such as Slime Volleyball. Since the word slime is conveniently not used very often, all URLs that contain the text string slime can be easily blocked. www.SlimeVolleyBall.net would be blocked simply because it contains the word Slime.

Although very simple, this type of blocking is ineffective in two ways:

- It frequently blocks sites that should not be blocked.

- Sites can get through the content filter easily by having a URL that does not explicitly mention what is on the site. Sites with codes or only partial phrases as URLs will get through this type of filter.

This approach could be called the profanity in URL approach because it is most often used to filter out URLs with profanity in them.
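A minimal Python sketch of this approach, using the Slime Volleyball example, shows both weaknesses. The function name `is_blocked_by_keyword` and the two `example` URLs are made up for illustration.

```python
# Hypothetical keyword list taken from the Slime Volleyball example.
BLOCKED_KEYWORDS = ("slime",)

def is_blocked_by_keyword(url, keywords=BLOCKED_KEYWORDS):
    """Block a URL if it contains any keyword, ignoring letter case."""
    lowered = url.lower()
    return any(keyword in lowered for keyword in keywords)

# The intended target is blocked.
print(is_blocked_by_keyword("http://www.SlimeVolleyBall.net"))             # True
# False positive: an unrelated page that happens to contain the keyword.
print(is_blocked_by_keyword("http://www.example.edu/biology/slime-mold"))  # True
# False negative: a URL that gives no hint of what the site contains.
print(is_blocked_by_keyword("http://www.example.com/g/sv2"))               # False
```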

URL Lookup Approach

This type of content filtering also uses the URL of a page. However, the URL is not scanned for keywords. Instead, the URL is looked up in a blacklist database. If it is found, the website is blocked. This type of filtering was popularized by desktop clients such as NetNanny and server-based solutions such as SquidGuard.

The blocking engine itself is powered by a blacklist database. Many products maintain their own blacklists. However, the quality of the content filtering is directly related to the quality of the blacklist, so third-party companies sell commercially maintained blacklists. For example, URLblacklist.com claims to maintain very good blacklists by manually checking each entry. [1]
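As a rough sketch of the lookup, the blacklist can be modeled as a set of host names. The entries, the `is_blacklisted` function, and the choice to match parent domains are assumptions for illustration; real products such as SquidGuard use their own database formats and matching rules.

```python
from urllib.parse import urlsplit

# Hypothetical blacklist entries; a real list would be loaded from a database.
BLACKLIST = {"slimevolleyball.net", "games.example.com"}

def is_blacklisted(url, blacklist=BLACKLIST):
    """Return True if the URL's host, or any parent domain of it, is listed.

    The URL is assumed to include a scheme such as "http://".
    """
    host = urlsplit(url).hostname or ""  # hostname is already lowercased
    labels = host.split(".")
    # Check "www.slimevolleyball.net", then "slimevolleyball.net", then "net".
    return any(".".join(labels[i:]) in blacklist for i in range(len(labels)))

print(is_blacklisted("http://www.SlimeVolleyBall.net/play"))        # True
print(is_blacklisted("http://www.example.edu/biology/slime-mold"))  # False
```

Unlike the keyword approach, the second URL is allowed because only listed sites are blocked, not every URL containing a word.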

HTML On Demand Document Scanning

The last type of simple filter is HTML document scanning. Instead of checking the URL against a list of keywords, the filter scans the HTML document, or portions of it.

This real-time scanning can block new documents that have not yet been incorporated into a URL blacklist. The challenge is that sites with dynamic content may be blocked incorrectly. For example, if Google News ran an article on Slime Volleyball, the Google News site would be blocked incorrectly for the day that story ran.
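A minimal sketch of on-demand scanning, using Python's standard `html.parser` module, might look like the following. The class `TextExtractor`, the function `page_is_blocked`, and the sample pages are invented for the example; a real filter would use far more elaborate rules.

```python
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collect the text found between tags.

    Note: this also collects the contents of script and style elements.
    """

    def __init__(self):
        super().__init__()
        self.chunks = []

    def handle_data(self, data):
        self.chunks.append(data)

def page_is_blocked(html, phrases):
    """Return True if the page text contains any blocked phrase."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    # Lowercase and collapse whitespace so phrases match across line breaks.
    text = " ".join(" ".join(parser.chunks).lower().split())
    return any(phrase in text for phrase in phrases)

blocked_phrases = ("slime volleyball",)  # hypothetical phrase list
game_page = "<html><body><h1>Play Slime Volleyball</h1></body></html>"
news_page = "<html><body><h1>Top stories</h1><p>Slime Volleyball\nturns ten</p></body></html>"

print(page_is_blocked(game_page, blocked_phrases))  # True
print(page_is_blocked(news_page, blocked_phrases))  # True (a false positive)
```

The second result illustrates the dynamic content problem: a news page is blocked only because one story mentions the phrase.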

Another major problem with document scanning is that more and more websites rely on technologies other than HTML. Many sites have Flash and JavaScript content. In the future, on-demand filtering will need to interpret a wide variety of content formats and programming languages.

HTTP Dataflow

Transparent Filtering

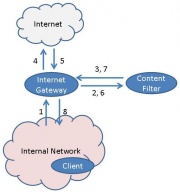

In a server-based setup, content filtering needs to take place automatically. A typical packet flow looks like this:

- Client Computer sends out request for information.

- The Internet Gateway forwards this request to the content filter.

- The Content Filter will forward the initial request to the Internet Gateway.

- The Internet Gateway will now service the request because it comes from the Content Filter. As a rule, the gateway forwards HTTP traffic to the Internet only when it originates from the Content Filter.

- The Internet will return data to the Gateway.

- The Gateway will forward data to the content filter. The Content Filter will scan the data.

- The Content Filter will return the filtered data to the Internet Gateway.

- The Gateway will return the filtered data to the Client.

This setup is typically called a transparent setup because the client does not know that the data comes from the content filter rather than from the Internet itself.
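The flow listed above can be simulated in a few lines of Python. Everything here is a stand-in: `FAKE_INTERNET` replaces real network access, and the function names, blocked phrase, and block page text are assumptions made for the example. A real deployment is built from routing rules and a proxy, not from function calls.

```python
# Stand-in for the Internet: maps URLs to page bodies (made-up data).
FAKE_INTERNET = {
    "http://www.example.com/": "Welcome to an ordinary page.",
    "http://www.SlimeVolleyBall.net/": "Play Slime Volleyball online!",
}
BLOCKED_PHRASES = ("slime volleyball",)  # hypothetical filter rule
BLOCK_PAGE = "Access to this page has been blocked."

def gateway_fetch_for_filter(url):
    """Gateway side: service a request that comes from the content filter
    and bring the Internet's response back to the filter."""
    return FAKE_INTERNET.get(url, "")

def content_filter(url):
    """Filter side: pass the request back through the gateway, then scan
    the returned data before handing it on."""
    body = gateway_fetch_for_filter(url)
    if any(phrase in body.lower() for phrase in BLOCKED_PHRASES):
        return BLOCK_PAGE
    return body

def gateway_handle_client_request(url):
    """Gateway side: divert the client's request to the content filter and
    return the filtered data to the client."""
    return content_filter(url)

print(gateway_handle_client_request("http://www.example.com/"))
print(gateway_handle_client_request("http://www.SlimeVolleyBall.net/"))
```

The client only ever calls `gateway_handle_client_request`, which mirrors why the setup is called transparent: the detour through the filter is invisible from the client's side.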

Caching Add-on

Since all HTTP data passes through the content filter, many filters can also cache data. The theory is that if many people on the same local network are browsing the Internet, they probably need much of the same data. If this data is cached on the content filter, it can be returned quickly without contacting the Internet at all.
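The caching idea can be sketched as a dictionary keyed by URL. The class `CachingFilter` and its `upstream_requests` counter are hypothetical names for this example, and the sketch never expires entries, whereas real HTTP caches honor expiry and validation headers.

```python
class CachingFilter:
    """Keep a copy of each fetched page so repeat requests skip the Internet."""

    def __init__(self, fetch):
        self._fetch = fetch          # function that retrieves a URL upstream
        self._cache = {}             # URL -> cached page body
        self.upstream_requests = 0   # how many times the Internet was contacted

    def get(self, url):
        if url not in self._cache:
            self.upstream_requests += 1
            self._cache[url] = self._fetch(url)
        return self._cache[url]

def fake_fetch(url):
    """Stand-in for a real network request."""
    return "contents of " + url

cache = CachingFilter(fake_fetch)
cache.get("http://www.example.com/")  # first user: fetched from upstream
cache.get("http://www.example.com/")  # second user: served from the cache
print(cache.upstream_requests)        # 1
```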

References

- [1] About URLBlacklist, URLblacklist.com

--Dasd2 12:53, 27 March 2008 (EDT)