Network layer

From Computing and Software Wiki

description:

Network layer solves the problem of getting packets across a single network. Examples of such protocols are X.25, and the ARPANET's Host/IMP Protocol.

With the advent of the concept of internetworking, additional functionality was added to this layer, namely getting data from the source network to the destination network. This generally involves routing the packet across a network of networks, known as an internetwork or (lower-case) internet.[7]

In the Internet protocol suite, IP performs the basic task of getting packets of data from source to destination. IP can carry data for a number of different upper layer protocols; these protocols are each identified by a unique protocol number: ICMP and IGMP are protocols 1 and 2, respectively.

Some of the protocols carried by IP, such as ICMP (used to transmit diagnostic information about IP transmission) and IGMP (used to manage IP Multicast data) are layered on top of IP but perform internetwork layer functions, illustrating an incompatibility between the Internet and the IP stack and OSI model. All routing protocols, such as OSPF, and RIP are also part of the network layer. What makes them part of the network layer is that their payload is totally concerned with management of the network layer. The particular encapsulation of that payload is irrelevant for layering purposes.

An example of an attack:

Denial of Service attack - SYN Flooding:

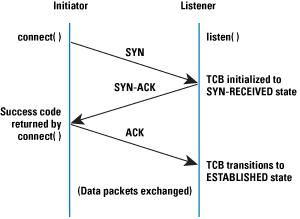

The basis of the SYN flooding attack lies in the design of the 3-way handshake that begins a TCP connection. In this handshake, the third packet verifies the initiator's ability to receive packets at the IP address it used as the source in its initial request, or its return reachability. Figure 1 shows the sequence of packets exchanged at the beginning of a normal TCP connection.

The Transmission Control Block (TCB) is a transport protocol data structure (actually a set of structures in many operations systems) that holds all the information about a connection. The memory footprint of a single TCB depends on what TCP options and other features an implementation provides and has enabled for a connection. Usually, each TCB exceeds at least 280 bytes, and in some operating systems currently takes more than 1300 bytes. The TCP SYN-RECEIVED state is used to indicate that the connection is only half open, and that the legitimacy of the request is still in question. The important aspect to note is that the TCB is allocated based on reception of the SYN packet— before the connection is fully established or the initiator's return reachability has been verified.

This situation leads to a clear potential DoS attack where incoming SYNs cause the allocation of so many TCBs that a host's kernel memory is exhausted. In order to avoid this memory exhaustion, operating systems generally associate a "backlog" parameter with a listening socket that sets a cap on the number of TCBs simultaneously in the SYN-RECEIVED state. Although this action protects a host's available memory resource from attack, the backlog itself represents another (smaller) resource vulnerable to attack. With no room left in the backlog, it is impossible to service new connection requests until some TCBs can be reaped or otherwise removed from the SYN-RECEIVED state.

Depleting the backlog is the goal of the TCP SYN flooding attack, which attempts to send enough SYN segments to fill the entire backlog. The attacker uses source IP addresses in the SYNs that are not likely to trigger any response that would free the TCBs from the SYN-RECEIVED state. Because TCP attempts to be reliable, the target host keeps its TCBs stuck in SYN-RECEIVED for a relatively long time before giving up on the half connection and reaping them. In the meantime, service is denied to the application process on the listener for legitimate new TCP connection initiation requests. Figure 2 presents a simplification of the sequence of events involved in a TCP SYN flooding attack.

A way to defense:

Both end-host and network-based solutions to the SYN flooding attack have merits. Both types of defense are frequently employed, and they generally do not interfere when used in combination. Because SYN flooding targets end hosts rather than attempting to exhaust the network capacity, it seems logical that all end hosts should implement defenses, and that network-based techniques are an optional second line of defense that a site can employ.

End-host mechanisms are present in current versions of most common operating systems. Some implement SYN caches, others use SYN cookies after a threshold of backlog usage is crossed, and still others adapt the SYN-RECEIVED timer and number of retransmission attempts for SYN-ACKs.

Because some techniques are known to be ineffective (increasing backlogs and reducing the SYN-RECEIVED timer), these techniques should definitely not be relied upon. Based on experimentation and analysis (and the author's opinion), SYN caches seem like the best end-host mechanism available.

This choice is motivated by the facts that they are capable of withstanding heavy attacks, they are free from the negative effects of SYN cookies, and they do not need any heuristics for threshold setting as in many hybrid approaches.

Among network-based solutions, there does not seem to be any strong argument for SYN-ACK spoofing firewall/proxies. Because these spoofing proxies split the TCP connection, they may disable some high-performance or other TCP options, and there seems to be little advantage to this approach over ACK-spoofing firewall/proxies. Active monitors should be used when a firewall/proxy solution is administratively impossible or too expensive to deploy. Ingress and egress filtering is frequently done today (but not ubiquitous), and is a commonly accepted practice as part of being a good neighbor on the Internet. Because filtering does not cope with distributed networks of drones that use direct attacks, it needs to be supplemented with other mechanisms, and must not be relied upon by an end host.