Streaming Media Technology

From Computing and Software Wiki


Revision as of 19:58, 9 April 2008

"Streaming media is multimedia that is constantly received by, and normally displayed to, the end-user while it is being delivered by the provider."[1] Streaming media technology enables on-demand or real-time access to multimedia content over the Internet and lets users play that content without fully downloading it. After playback, no copy of the content remains on the receiving device, which helps protect the copyright of the original multimedia content.

Basic Knowledge

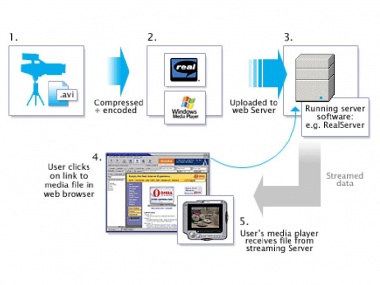

When a user requests to play a media object that is stored on a remote server, the data blocks are retrieved from the server, sent over a network, and passed to the client for display.[3] There are usually two ways of doing this. In the first, the player does not start until the media object has been completely downloaded to the client. In the second, the player starts as soon as enough data has been received and keeps playing while the rest arrives, without waiting for the full download. The second approach is called streaming media.

Compared with a full download, streaming has several advantages. First, there is almost no waiting time before playback. Second, no copy is stored on the client, which protects the copyright and reduces the client's storage requirement. Third, real-time events become possible. However, streaming is always limited by network conditions. If the network is too slow, streaming does not work well and some data may be lost in transit.

System Architecture

Assume a streaming media object S that consists of n equal-size blocks, S0, S1, ..., Sn-1, and is stored on a streaming media server. Three time variables are important:

- The retrieval time of each block is a function of the transfer rate of the server.

- The delivery time of each block from the server to the client is a function of the network speed, traffic, and protocol used.

- The display time of each block is a function of the display requirements of the object and the size of the block. For example, if the display requirement of the object S is 3 Mb/s and the size of each block, Si, is 3 Mbytes (24 Mbits), then the display time of each block is 8 seconds. [3]
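The display-time example can be checked with a few lines of Python. This is only a sketch of the arithmetic; the function name `display_time_seconds` is chosen here for illustration and does not come from reference [3].

```python
def display_time_seconds(block_size_mbytes: float, display_rate_mbit_per_s: float) -> float:
    """Time needed to display one block at the object's display rate."""
    block_size_mbit = block_size_mbytes * 8  # 1 byte = 8 bits
    return block_size_mbit / display_rate_mbit_per_s


# The example from the text: 3 Mbyte blocks displayed at 3 Mb/s.
example_display_time = display_time_seconds(3, 3)
print(example_display_time)  # 8.0
```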

When a client requests the display of a media object, the server schedules the retrieval of the blocks and delivers them to the client over the network. The server stages a block of S (say Si) from disk into main memory and delivers it to the client. Once received, Si is displayed. The server schedules the retrieval and delivery of Si+1 before the display of Si completes. This process repeats until all blocks of S have been retrieved, delivered, and displayed. [3]

To ensure continuous display of object S without any hiccups, block Si+1 must be available in the client's buffer before the display of Si completes. [3]
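The continuity condition can be illustrated with a small check. The sketch below assumes a simplified model that is not taken from reference [3]: every block has the same display time, display starts at a fixed `start_time`, and playback never pauses, so block Si is due at `start_time + i * display_time`. The function name and the sample arrival times are hypothetical.

```python
def find_hiccups(arrival_times, display_time, start_time):
    """Return the indices of blocks that arrive after their display deadline.

    arrival_times[i] is the moment block S_i is fully in the client buffer.
    Under the fixed-schedule assumption, S_i must be shown at
    start_time + i * display_time, which is also the moment the display
    of S_(i-1) completes.
    """
    late_blocks = []
    for i, arrival in enumerate(arrival_times):
        deadline = start_time + i * display_time
        if arrival > deadline:
            late_blocks.append(i)
    return late_blocks


# Blocks take 8 seconds to display and playback starts at t = 2 s.
smooth = find_hiccups([2, 9, 17, 25], display_time=8, start_time=2)
stalled = find_hiccups([2, 9, 17, 27], display_time=8, start_time=2)
print(smooth, stalled)
```

In the second run the last block is due at t = 26 s but arrives at t = 27 s, so the client would run out of data while waiting for it.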

P.S.: The architecture described here is paraphrased from the book Streaming Media Server Design, which is listed as reference [3].

Storage and Bandwidth

Storage

Streaming media storage size is a function of bit rate and length:

SIZE (Kbit) = BIT RATE (Kbit/s) * LENGTH (s).

Because the result is usually a very large number, the storage size is normally expressed in Mbytes:

SIZE (Mbyte) = BIT RATE (Kbit/s) * LENGTH (s)/ 8,388.608

(since 1 megabyte = 8 * 1,048,576 bits = 8,388.608 kilobits)
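The conversion can be sketched in Python as follows. The bit rate of 300 kbit/s and the one-hour length are illustrative values chosen here, not figures from this article.

```python
# 1 megabyte = 8 * 1,048,576 bits = 8,388.608 kilobits (1 kilobit = 1000 bits)
KBIT_PER_MBYTE = 8 * 1_048_576 / 1000


def storage_size_mbytes(bit_rate_kbit_per_s: float, length_s: float) -> float:
    """SIZE (Mbyte) = BIT RATE (Kbit/s) * LENGTH (s) / 8,388.608"""
    return bit_rate_kbit_per_s * length_s / KBIT_PER_MBYTE


# Hypothetical clip: one hour (3600 s) encoded at 300 kbit/s.
size = storage_size_mbytes(300, 3600)
print(f"{size:.2f} Mbyte")
```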

Figure 2 shows the storage requirements for a 120 minute video clip digitized in different standard encoding formats.

Bandwidth

Suppose a file encoded at 250 kbit/s is stored on a server for on-demand streaming and is viewed by 100 people at the same time. With a Unicast protocol, which sends a separate copy of the stream to each viewer, you would need:

250 kbit/s · 100 = 25,000 kbit/s = 25 Mbit/s of bandwidth

With a Multicast protocol (which is commonly used), such a stream needs only 250 kbit/s of bandwidth, because a single copy is sent for all viewers. To play a streaming media object without stalling, the available bandwidth must be greater than or equal to the bit rate of the streaming media object.
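The two bandwidth figures can be reproduced with a short sketch. The function names are chosen here for illustration, and the model is deliberately simple: it only counts the bandwidth needed at the server and ignores protocol overhead.

```python
def unicast_bandwidth_kbit(bit_rate_kbit: float, viewers: int) -> float:
    """Unicast sends one copy of the stream per viewer."""
    return bit_rate_kbit * viewers


def multicast_bandwidth_kbit(bit_rate_kbit: float, viewers: int) -> float:
    """Multicast sends a single copy, however many viewers there are."""
    return bit_rate_kbit if viewers > 0 else 0


unicast = unicast_bandwidth_kbit(250, 100)      # 25,000 kbit/s = 25 Mbit/s
multicast = multicast_bandwidth_kbit(250, 100)  # 250 kbit/s
print(unicast, multicast)
```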

Data Compression

A 120 minute uncompressed video clip digitized in HDTV format at 1.2 Gb/s requires 1055 Gbyte of storage, as shown in the previous section. Although modern storage devices offer large capacities, it is not economical to store streaming media objects uncompressed (even temporarily). Likewise, although network speeds are increasing, it is not economically feasible to deliver a large number of uncompressed streaming media objects over existing networks. To solve these problems, we need to compress the objects: a smaller streaming media object requires less storage and less network bandwidth. [3]

Run-length encoding

Run-length encoding is one of the simplest data compression techniques. It replaces each run of consecutive occurrences of a symbol with the number of times the symbol occurs followed by the symbol itself.

For example, the string

LLLOOOOOOOVVEEEEEEEEEE

can be compressed as

3L7O2V10E

The run-length code represents the original 22 characters in only 9.

This compression technique is most useful when symbols occur in long runs; it does not work well if most adjacent symbols are different.
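A minimal Python sketch of run-length encoding reproduces the example above. It assumes that the symbols themselves are never digits, since otherwise the counts could not be told apart from the data; the function names are chosen here for illustration.

```python
import re
from itertools import groupby


def rle_encode(text: str) -> str:
    """Replace each run of a repeated symbol with '<count><symbol>'."""
    return "".join(f"{len(list(run))}{symbol}" for symbol, run in groupby(text))


def rle_decode(encoded: str) -> str:
    """Expand each '<count><symbol>' pair back into a run (symbols must not be digits)."""
    pairs = re.findall(r"(\d+)(\D)", encoded)
    return "".join(symbol * int(count) for count, symbol in pairs)


original = "L" * 3 + "O" * 7 + "V" * 2 + "E" * 10
encoded = rle_encode(original)
print(encoded, len(original), len(encoded))
```

Encoding a string with no repeated neighbours, such as "ABCD", gives "1A1B1C1D", which is longer than the input. This is the weakness mentioned above.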

Relative encoding

Relative encoding is also a simple data compression technique. It replaces the actual value of an object O with the difference between O and the object before it.

For example, the series of pixel values

"50 130 257 135 70"

can be compressed as

"50+80+127-122-65"

In this example, the largest original value (257) needs 9 bits to represent, whereas every difference has a magnitude of at most 127, which fits in 7 bits plus a sign.

This compression technique is most useful when adjacent objects do not differ greatly.
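The pixel example can be sketched in Python as below. The first value is kept as is and every later value is stored as the difference from its predecessor; the function names are chosen here for illustration.

```python
from itertools import accumulate


def delta_encode(values):
    """Keep the first value, then store each value as a difference from the previous one."""
    if not values:
        return []
    deltas = [values[0]]
    for previous, current in zip(values, values[1:]):
        deltas.append(current - previous)
    return deltas


def delta_decode(deltas):
    """Rebuild the original values with a running sum of the differences."""
    return list(accumulate(deltas))


pixels = [50, 130, 257, 135, 70]
deltas = delta_encode(pixels)
print(deltas)
```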

Huffman encoding

"Huffman encoding is a very popular compression technique that assigns variable length codes to symbols, so that the most frequently occurring symbols have the shortest codes." [3]

For example, if we want to encode a source of 5 symbols: a,b,c,d,e and the probabilities of these symbols are

P(a) = 0.15, P(b) = 0.17, P(c) = 0.25, P(d) = 0.20, P(e) = 0.23, where P(a)+P(b)+P(c)+P(d)+P(e) = 1.

Using Huffman encoding, we can represent a as 000, b as 001, c as 10, d as 01, and e as 11. The average code length per symbol, in bits, is:

0.15*3 + 0.17*3 + 0.25*2 + 0.20*2 +0.23*2 = 2.32

Without Huffman encoding, a fixed-length code needs 3 bits per symbol to represent 5 symbols.

Huffman encoding is very useful, and constructing the code is more involved than the description above suggests. See the external links for more details.
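As a rough sketch of how such a code is built, the Python below repeatedly merges the two least probable groups of symbols and adds one bit to the code length of every symbol in the merged group. It computes only the code lengths, not the bit patterns, and the function name is chosen here for illustration. For the probabilities above it yields the same lengths as the codes in the example.

```python
import heapq
from itertools import count


def huffman_code_lengths(probabilities):
    """Return {symbol: code length in bits} for a Huffman code."""
    tiebreak = count()  # keeps heap entries comparable when probabilities are equal
    heap = [(p, next(tiebreak), [symbol]) for symbol, p in probabilities.items()]
    heapq.heapify(heap)
    lengths = {symbol: 0 for symbol in probabilities}
    while len(heap) > 1:
        p1, _, group1 = heapq.heappop(heap)  # least probable group
        p2, _, group2 = heapq.heappop(heap)  # second least probable group
        for symbol in group1 + group2:
            lengths[symbol] += 1  # each merge adds one bit to these symbols
        heapq.heappush(heap, (p1 + p2, next(tiebreak), group1 + group2))
    return lengths


probabilities = {"a": 0.15, "b": 0.17, "c": 0.25, "d": 0.20, "e": 0.23}
lengths = huffman_code_lengths(probabilities)
average = sum(probabilities[s] * lengths[s] for s in probabilities)
print(lengths, round(average, 2))
```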

Network Protocols

The Internet is not naturally suited to real-time traffic because of its shared datagram nature. Moreover, the Internet Protocol (IP) has some fundamental problems in transmitting real-time data. Therefore, additional protocols have been developed to support quality of service (QoS) for streaming media applications.[3]

RTP

The Real-time Transport Protocol (RTP) is a transport protocol for delivering audio, video, and other real-time data over the Internet. RTP uses timestamping, sequence numbering, and other mechanisms to provide end-to-end transport for real-time applications over the datagram network.[3] This information is stored in the header of each RTP packet.

The fixed RTP header is 96 bits long. Its bit fields are:

- Bits 0-1 indicate the version of the protocol.

- Bit 2 indicates whether there are extra padding bytes at the end of the RTP packet.

- Bit 3 indicates whether an extension header is used.

- Bits 4-7 indicate the number of contributing source identifiers that follow the fixed header.

- Bit 8 is a marker whose meaning is defined at the application level, for example to flag data that is significant to the application.

- Bits 9-15 indicate the payload format, including the encoding method.

- Bits 16-31 hold the sequence number, which is used to determine the correct order of packets.

- Bits 32-63 hold the timestamp, which the receiver uses to reconstruct the original timing.

- Bits 64-95 identify the synchronization source.
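The bit layout above can be unpacked with Python's standard `struct` module. This is a minimal sketch that reads only the 96-bit fixed header and ignores any contributing source list, extension header, or payload. The dictionary keys and the sample field values are chosen here for illustration.

```python
import struct


def parse_rtp_header(packet: bytes) -> dict:
    """Split the first 12 bytes (96 bits) of an RTP packet into its fields."""
    if len(packet) < 12:
        raise ValueError("the RTP fixed header needs at least 12 bytes")
    # Network byte order: two single bytes, a 16-bit and two 32-bit integers.
    first, second, sequence, timestamp, ssrc = struct.unpack("!BBHII", packet[:12])
    return {
        "version": first >> 6,              # bits 0-1
        "padding": bool(first & 0x20),      # bit 2
        "extension": bool(first & 0x10),    # bit 3
        "csrc_count": first & 0x0F,         # bits 4-7
        "marker": bool(second & 0x80),      # bit 8
        "payload_type": second & 0x7F,      # bits 9-15
        "sequence_number": sequence,        # bits 16-31
        "timestamp": timestamp,             # bits 32-63
        "ssrc": ssrc,                       # bits 64-95
    }


# Hypothetical header: version 2, payload type 96, sequence number 1.
sample = struct.pack("!BBHII", 0x80, 0x60, 1, 1000, 0x12345678)
header = parse_rtp_header(sample)
print(header)
```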

RTCP

The Real-time Transport Control Protocol (RTCP) is the control protocol designed to work alongside RTP, because RTP itself has no error correction or flow control functionality.

There are several types of RTCP packets:

- A receiver report packet contains quality feedback about the data delivery.

- A sender report packet contains a sender information section.

- A source description packet contains information about the sources.

- An application-specific packet is intended for experimental use by new applications and new features.

Using these packets, RTCP can monitor QoS, identify sources, synchronize different media streams, and scale its control information. [3]

RTSP

The Real Time Streaming Protocol (RTSP) is a client-server presentation protocol that lets a client remotely control a streaming media server with operations such as play, fast-forward, fast-rewind, pause, and stop. RTSP does not itself transport the media data over the Internet, so it usually relies on RTP as its transport protocol.
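RTSP control messages are plain text, similar in shape to HTTP requests. The sketch below only formats a request string in the RTSP/1.0 style, with a request line, a CSeq header, and an optional Session header. It does not open a connection, and the URL, the session identifier, and the function name are hypothetical.

```python
def build_rtsp_request(method: str, url: str, cseq: int, session: str = None) -> str:
    """Format a minimal RTSP/1.0 request as text."""
    lines = [f"{method} {url} RTSP/1.0", f"CSeq: {cseq}"]
    if session is not None:
        lines.append(f"Session: {session}")
    # Header lines end with CRLF and an empty line closes the request.
    return "\r\n".join(lines) + "\r\n\r\n"


# Hypothetical requests against a placeholder server.
play = build_rtsp_request("PLAY", "rtsp://example.com/media", cseq=2, session="12345")
pause = build_rtsp_request("PAUSE", "rtsp://example.com/media", cseq=3, session="12345")
print(play)
```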

Applications

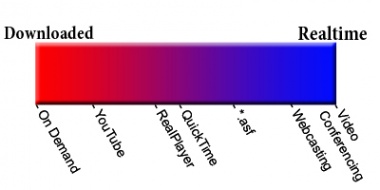

There are two main forms of streaming media:[4]

- On Demand: Typically requires downloading the media for short-term use and allows users to fast-forward, pause, and rewind the media as they please.

- Video Conferencing: Real-time streaming meant to be used as it happens; it is typically only available while live.

Five applications are very popular now:[4]

- YouTube: Most similar to On Demand, as users can access media at their convenience. However, it is not true On Demand because the content is only streamed temporarily.

- Webcasting: Most similar to Video Conferencing, as it is typically broadcast over the Internet at a certain time and users typically cannot choose exactly what they want to watch. However, it is not true Video Conferencing because the material can be prepared beforehand even if it is presented as live.

- RealPlayer: Functionally similar to YouTube but requires a platform to run it (with a built-in explorer).

- QuickTime: Similar to ASF but with more potential for pause/fast-forward/rewind control.

- *.asf: A Windows Media format that combines non-controllable streaming with the potential for On Demand access, but it requires a certain platform to run.

References

[1] Wikipedia, "Streaming Media", April 2008, http://en.wikipedia.org/wiki/Streaming_media.

[2] Sequence website, http://www.sequence.co.uk/services/streamingmedia/howdoesitwork.html.

[3] Dashti, Ali E., Streaming Media Server Design, Prentice Hall, 2003.

[4] Rosolak, Misha, "BuzzWords - Streaming Media", Software Engineering 3I03, McMaster University, Fall 2007.

[5] Lee, Jack, Scalable Continuous Media Streaming System, John Wiley & Sons Ltd, 2005.

See also

External links

- streamingmedia.com - Streaming Media Industry News

- Streaming Media - Streaming Media Wiki Page

- TCP/IP_model - Protocols on the Five-layer TCP/IP Model

- Huffman Encoding - Huffman Encoding Wiki Page

--Chuh 15:58, 9 April 2008 (EDT)